This block opens the recognition window and shows the machine learning model's analysis on the camera feed, which makes it useful for visualizing how the model behaves in PictoBlox.


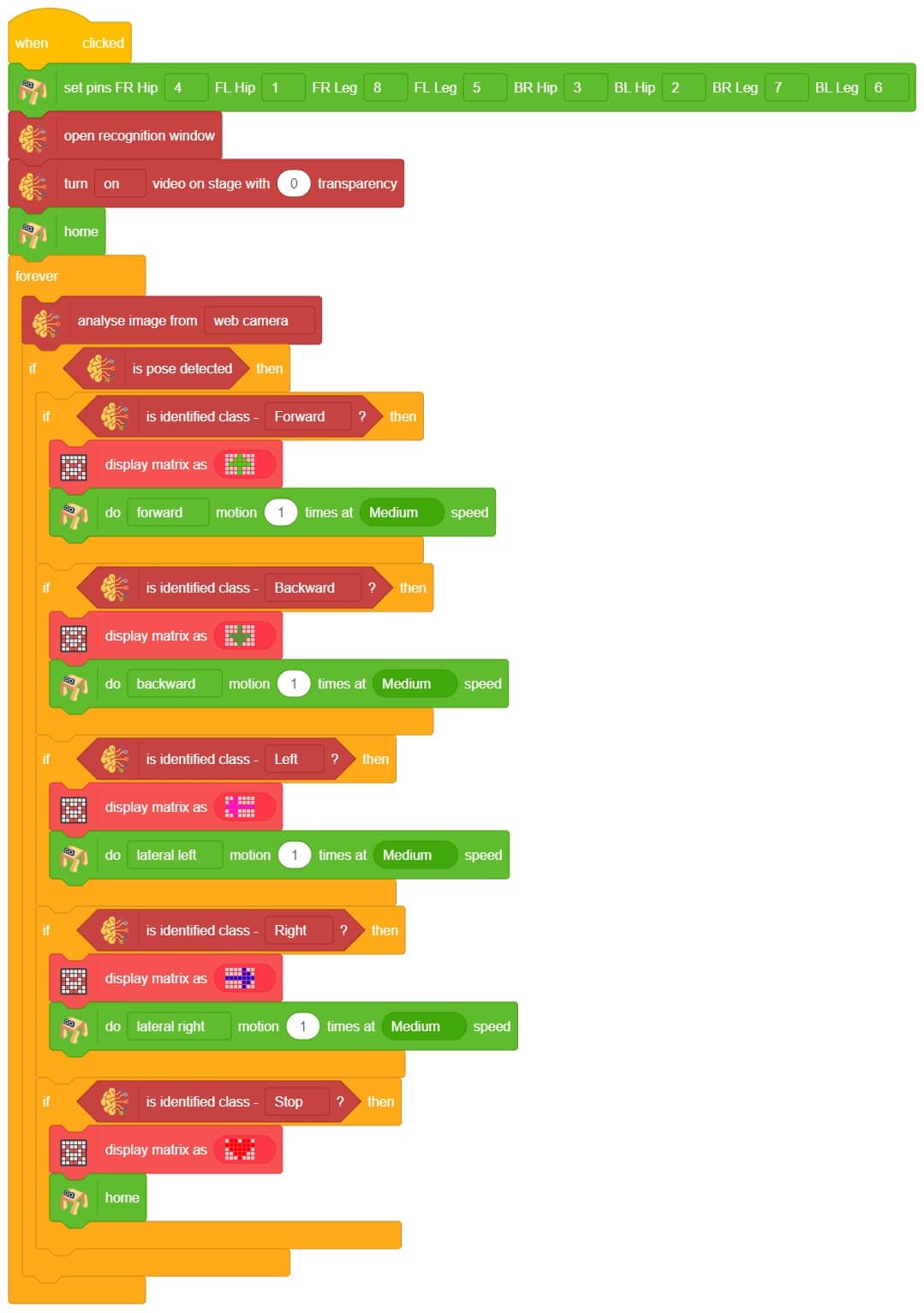

This project demonstrates how to use the Machine Learning Environment to build a machine learning model that identifies hand gestures and makes the Quadruped move accordingly.

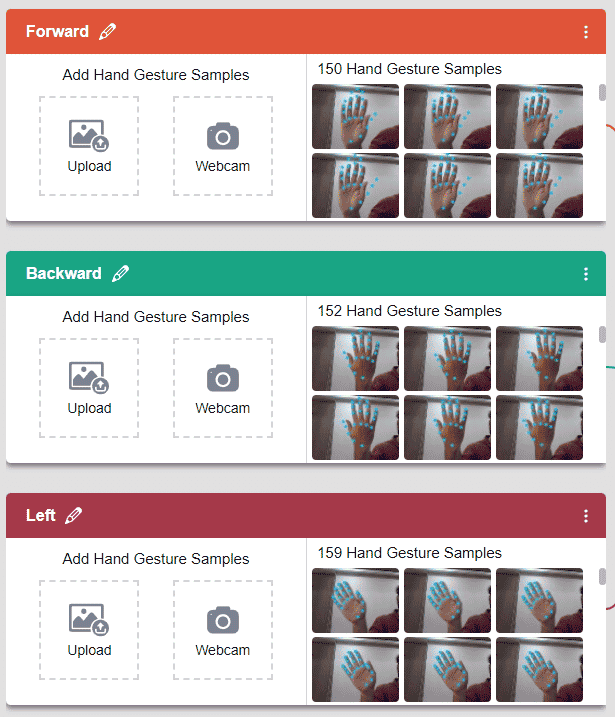

We are going to use the Hand Classifier of the Machine Learning Environment. The model analyzes your hand position using 21 data points on the hand.
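To make the "21 data points" idea concrete, here is a minimal sketch of how one hand pose could be turned into a feature vector. It assumes each data point is an (x, y) position, that point 0 is the wrist (as in common 21-point hand models), and that the points are normalized for position and size; PictoBlox's internal preprocessing is not described here, so `landmarks_to_features` and its normalization are hypothetical.

```python
from typing import List, Tuple

Landmark = Tuple[float, float]  # (x, y) position of one hand data point

NUM_LANDMARKS = 21  # the Hand Classifier works with 21 data points per hand


def landmarks_to_features(landmarks: List[Landmark]) -> List[float]:
    """Flatten 21 (x, y) points into one 42-value feature vector.

    Assumption: point 0 is the wrist. Every point is shifted so the wrist is at
    the origin, then divided by the largest absolute offset, so the features do
    not depend on where the hand is in the frame or how large it appears.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    wrist_x, wrist_y = landmarks[0]
    shifted = [(x - wrist_x, y - wrist_y) for x, y in landmarks]
    # Guard against a degenerate pose where every point sits on the wrist.
    scale = max(max(abs(x), abs(y)) for x, y in shifted) or 1.0
    features: List[float] = []
    for x, y in shifted:
        features.extend([x / scale, y / scale])
    return features


if __name__ == "__main__":
    # Hypothetical pose: points spread along a diagonal line.
    pose = [(float(i), float(2 * i)) for i in range(NUM_LANDMARKS)]
    print(len(landmarks_to_features(pose)))
```

Normalizing relative to the wrist means the same gesture produces similar features whether your hand is near the camera or far from it.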

Follow the steps below:

You have to provide two things for each class:

You can perform the following operations to manage the data in a class.


Once the data is added, it can be used to train the model. Training extracts meaningful information from the hand poses and uses it to update the model's weights. Once these weights are saved, the model can make predictions on data it has not seen before.
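The idea that "training updates the weights" can be illustrated with a small softmax (multinomial logistic regression) classifier trained by stochastic gradient descent. This is a stand-in technique chosen for clarity, not the Hand Classifier's actual architecture, which this page does not describe; `SoftmaxClassifier`, the toy two-feature data, and the learning rate are all hypothetical.

```python
import math
import random
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxClassifier:
    """Hypothetical linear classifier: one weight row and one bias per class."""

    def __init__(self, num_features: int, num_classes: int, seed: int = 0):
        rng = random.Random(seed)
        self.weights = [[rng.uniform(-0.01, 0.01) for _ in range(num_features)]
                        for _ in range(num_classes)]
        self.bias = [0.0] * num_classes

    def predict_proba(self, x: Sequence[float]) -> List[float]:
        scores = [sum(w * xi for w, xi in zip(row, x)) + b
                  for row, b in zip(self.weights, self.bias)]
        return softmax(scores)

    def predict(self, x: Sequence[float]) -> int:
        probs = self.predict_proba(x)
        return max(range(len(probs)), key=lambda c: probs[c])

    def train_epoch(self, xs: Sequence[Sequence[float]], ys: Sequence[int],
                    learning_rate: float = 0.1) -> None:
        """One pass over the data; each sample nudges the weights (SGD on cross-entropy)."""
        for x, y in zip(xs, ys):
            probs = self.predict_proba(x)
            for c in range(len(self.weights)):
                # Gradient of cross-entropy w.r.t. the class-c score: p_c - 1[c == y]
                grad = probs[c] - (1.0 if c == y else 0.0)
                for j, xj in enumerate(x):
                    self.weights[c][j] -= learning_rate * grad * xj
                self.bias[c] -= learning_rate * grad


if __name__ == "__main__":
    # Toy data standing in for hand-pose features of two gesture classes.
    xs = [[1.0, 0.0], [0.9, 0.2], [0.0, 1.0], [0.2, 0.9]]
    ys = [0, 0, 1, 1]
    model = SoftmaxClassifier(num_features=2, num_classes=2)
    for _ in range(50):
        model.train_epoch(xs, ys, learning_rate=0.5)
    print(model.predict([0.8, 0.1]), model.predict([0.1, 0.8]))  # unseen inputs
```

The trained weights are what get "saved"; predicting on `[0.8, 0.1]` and `[0.1, 0.8]`, which were not in the training data, shows the model generalizing to unseen inputs.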

The accuracy of the model should increase as training progresses. In the accuracy graph, the x-axis shows the epochs and the y-axis shows the accuracy at each epoch. Accuracy ranges from 0 to 1, and the higher the reading, the better the model.
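Each point on the accuracy graph is simply the fraction of samples the model classified correctly at that epoch, which is why the value always lies between 0 and 1. A minimal sketch, with hypothetical helper names `accuracy` and `best_epoch`:

```python
from typing import List, Sequence


def accuracy(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Fraction of samples whose predicted class matches the true class (0 to 1)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


def best_epoch(history: List[float]) -> int:
    """Return the 1-based epoch with the highest recorded accuracy (first one on ties)."""
    if not history:
        raise ValueError("empty accuracy history")
    return max(range(len(history)), key=lambda i: history[i]) + 1


if __name__ == "__main__":
    # Three of four samples correct gives an accuracy of 0.75.
    print(accuracy([0, 1, 1, 2], [0, 1, 2, 2]))
```

Recording `accuracy(...)` once per epoch gives the list of y-values plotted against the epoch number on the x-axis.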

The model returns the probability that the input belongs to each class. You will get the following output from the model.
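Because the output is one probability per class, a script usually picks the class with the highest probability and keeps that probability as a confidence value. A minimal sketch, assuming the output is available as a dictionary from class name to probability; the function `top_prediction` and the class names are hypothetical.

```python
import math
from typing import Dict, Tuple


def top_prediction(probabilities: Dict[str, float]) -> Tuple[str, float]:
    """Return the most likely class and its probability.

    Assumes `probabilities` maps each class name to a value in [0, 1]
    and that the values sum to 1 (within a small tolerance).
    """
    if not probabilities:
        raise ValueError("no class probabilities given")
    total = sum(probabilities.values())
    if not math.isclose(total, 1.0, abs_tol=1e-6):
        raise ValueError(f"probabilities should sum to 1, got {total}")
    label = max(probabilities, key=probabilities.get)
    return label, probabilities[label]


if __name__ == "__main__":
    # Hypothetical model output for three gesture classes.
    print(top_prediction({"forward": 0.7, "backward": 0.2, "stop": 0.1}))
```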

The Quadruped will move according to the following logic:
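The movement logic can be thought of as a lookup from the recognized gesture to a Quadruped action, with a fallback when the model is not confident. The sketch below assumes hypothetical class names, hypothetical action strings, and a hypothetical confidence threshold of 0.8; in a real PictoBlox script, each returned string would be replaced by the corresponding Quadruped movement block.

```python
from typing import Dict

# Hypothetical gesture classes mapped to hypothetical movement commands.
GESTURE_TO_ACTION: Dict[str, str] = {
    "forward": "walk forward",
    "backward": "walk backward",
    "left": "turn left",
    "right": "turn right",
    "stop": "stand still",
}

SAFE_ACTION = "stand still"  # used when the prediction is uncertain or unknown


def choose_action(label: str, confidence: float, threshold: float = 0.8) -> str:
    """Map a predicted gesture to a movement, falling back to SAFE_ACTION
    when the confidence is below `threshold` or the class is not mapped."""
    if confidence < threshold or label not in GESTURE_TO_ACTION:
        return SAFE_ACTION
    return GESTURE_TO_ACTION[label]


if __name__ == "__main__":
    print(choose_action("left", 0.95))
    print(choose_action("left", 0.50))
```

Falling back to a stationary action keeps the robot from reacting to ambiguous hand poses; raising the threshold makes it more cautious at the cost of ignoring some valid gestures.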

Copyright 2026 – Agilo Research Pvt. Ltd. All rights reserved.