Introduction

You’ve made mobile robots and controlled them using your keyboard, smartphone, joystick, and whatnot. This time, we’re going to level up, channel our inner Jean Grey, and control them using hand gestures.

In this project, you’ll learn how to control a 2-wheel drive robot with hand gestures using the Machine Learning extension in PictoBlox.

Ready? Set. AI!

Prerequisites to make the Gesture Controlled Robot

You’ll need the following things to make the gesture-controlled robot:

- A 2-wheel drive robot

- A laptop or desktop computer with a camera

- The latest version of PictoBlox

- A good internet connection

To learn how to build the 2-wheel drive robot, follow the link here.

Training the Model

To work with the Machine Learning extension, we first need to train a model to recognize hand gestures from the stage, i.e., our camera feed.

- Visit https://teachablemachine.withgoogle.com/ to train the model.

- Click the Get Started button. A new page will open.

- Select the Image Project tile, as we will be training the model with images of our hand gestures.

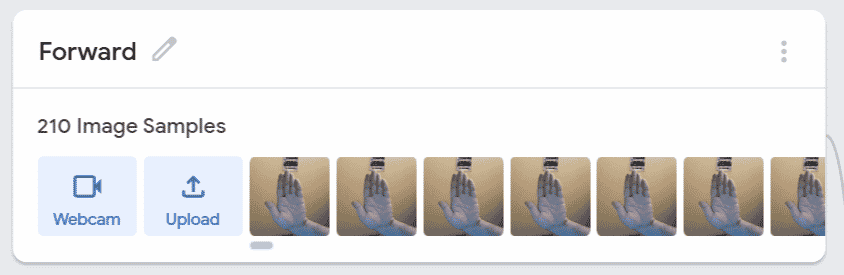

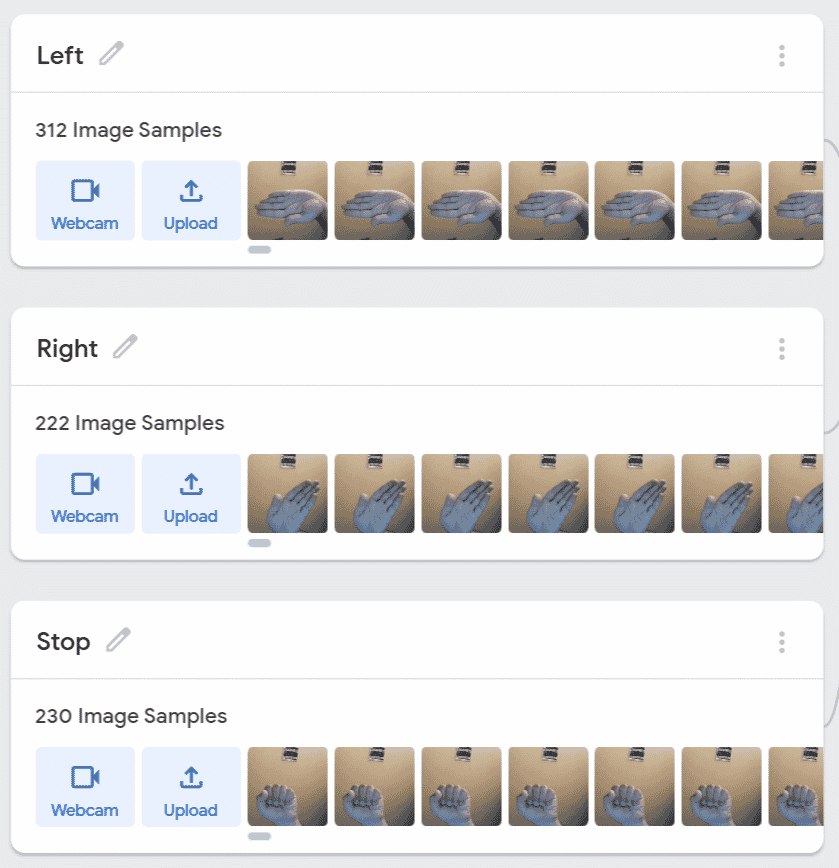

- Let’s add the first class. You can add sample images to a class either by capturing them with a webcam or by uploading them. In this case, we will capture our sample images with the webcam, so click the Webcam button to start the camera.

- We’ll first make the class for moving the robot forward. Keep your palm open in front of the camera and click Hold to Record to record the images.

- We will make four classes, one for each robot action, and name them forward, left, right, and stop.

- Similarly, make the other three classes, using a different hand gesture for each.

With this, you’ve recorded the sample images needed to train the model. We’ll now train it.

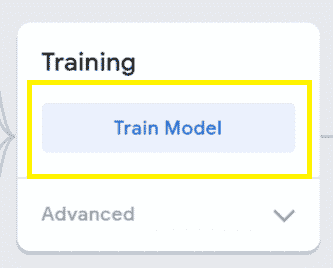

Training the model

- Click the Train Model button and wait for the model to finish training.

- Once training is complete, you can test the model in the Preview panel using your webcam.

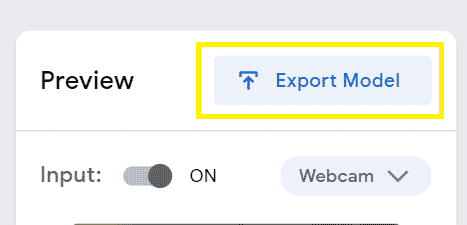

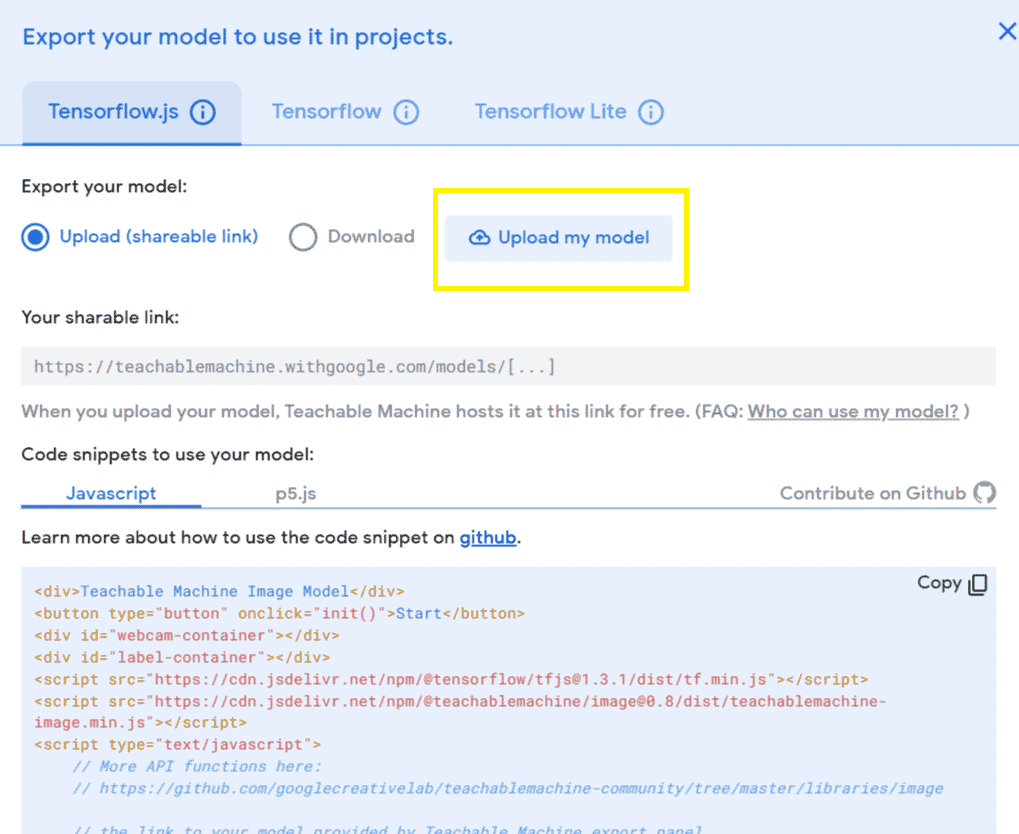

Exporting the Trained Model

Now that the model is trained, let’s export it.

- Click on the Export Model button.

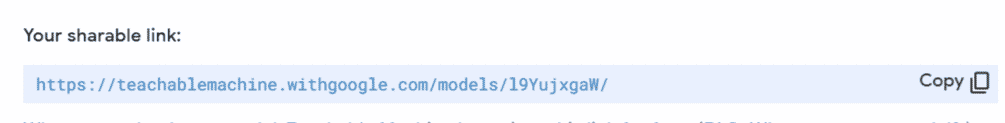

- A pop-up will open. Click the Upload my model button.

- A shareable link will appear. Copy it.

Writing the Script

Now that the model is uploaded, let’s begin writing the script.

Setting up the Project

- Open PictoBlox. We will first select the board and then add the Machine Learning extension.

- To work with the AI and ML extensions, keep two things in mind:

- You must be signed in to PictoBlox. You can sign in from here.

- You must have a good internet connection.

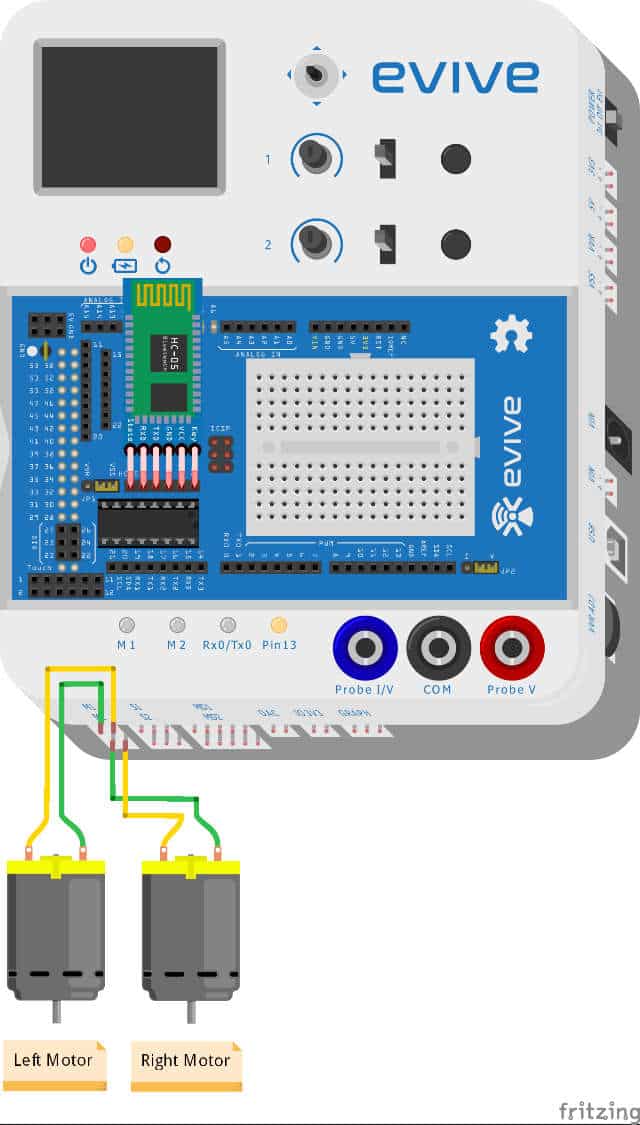

- Click on the board button and select evive.

- If you are using the evive board for the first time, you need to upload its firmware. Follow the link here to learn how.

- We have already uploaded the firmware to our robot. We now need to add the Machine Learning extension.

- Click the Add Extension button and select the Machine Learning extension.

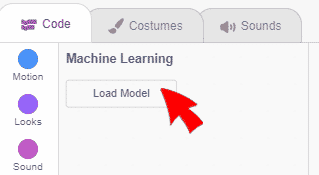

- Click the Load Model button.

- A pop-up will open. Paste the link that you copied from Teachable Machine and click the Load Model button.

You should now see the Machine Learning blocks loaded in the block palette.

Setting Up the Stage and evive’s Screen

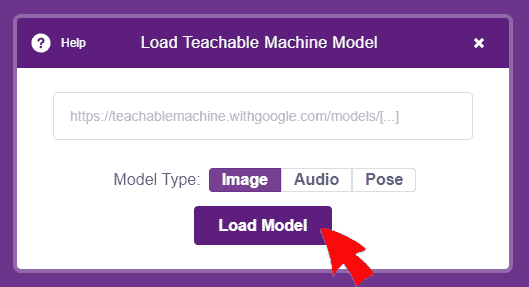

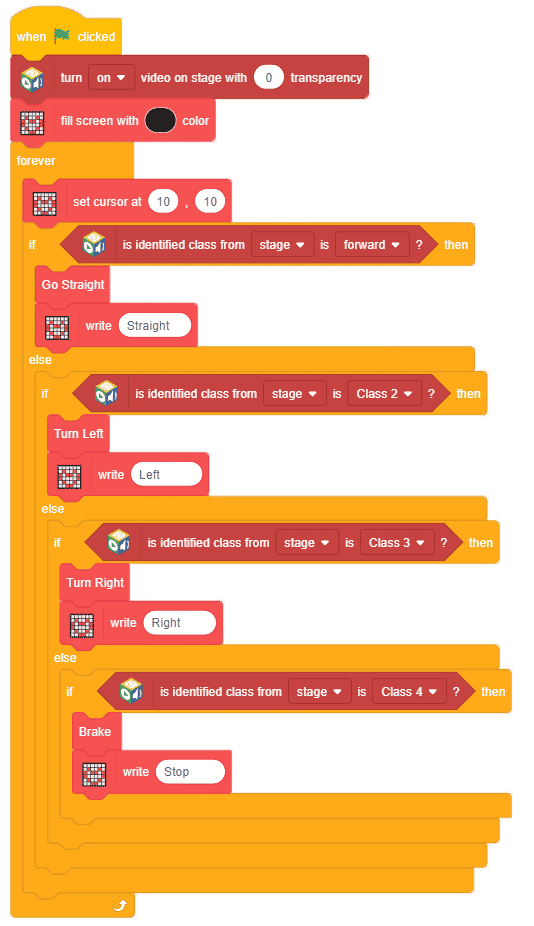

- First, drag and drop the when flag clicked hat block from the Events palette to run the program when the green flag is clicked.

- As we need to recognize hand gestures from the camera feed, place the turn () video on stage with () transparency block from the Machine Learning palette below it, select on, and set the transparency to 0.

- Next, clear evive’s display by placing the fill screen with () color block from the Display palette and choosing black.

- Place a forever block to make the code run continuously.

- Inside the forever block, place the set cursor at () () block from the Display palette and set it to (10, 10), so that every time we write something on the screen, the text starts at the same position.


Assigning Actions

Next, we will recognize the gestures from the stage and perform actions accordingly.

To keep the main script simple, let’s first make a few custom blocks, one for each robot action.

- Go to the My Blocks palette.

- Click the Make a Block button.

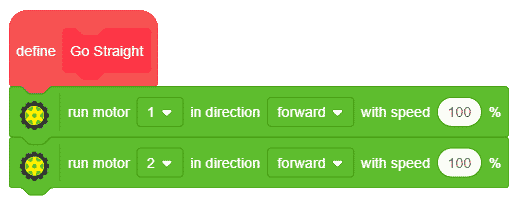

- First, we will make the block that moves the robot forward. Type go straight as the block name and click OK.

- A define go straight hat block will appear in the scripting area. Below it, place the run motor () in direction () with speed () % block from the Actuators palette.

- Duplicate this block, place the copy below it, and choose 2 from its first drop-down so that both motors run.

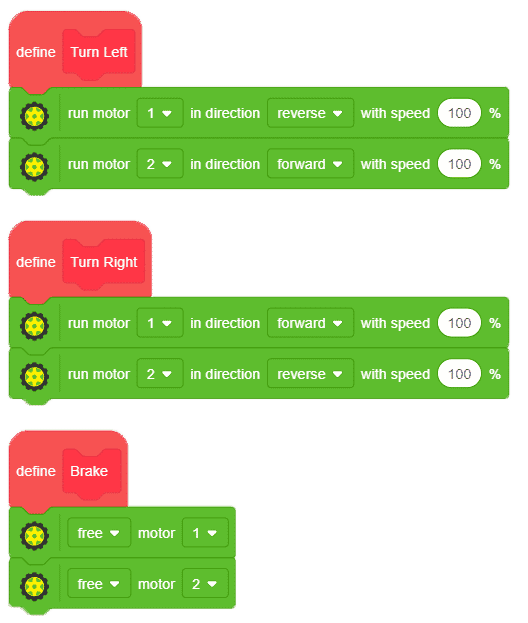

- Similarly, make the Turn Left, Turn Right, and Brake custom blocks one by one.

- Duplicate the two motor blocks and place them below the Turn Left and Turn Right hat blocks.

- Now, make a few changes to these blocks. Running one motor in reverse makes the two wheels spin in opposite directions, which turns the robot.

- In the Turn Left block, select reverse from the second drop-down of the first motor block.

- In the Turn Right block, select reverse from the second drop-down of the second motor block.

- In the Brake block, drag and drop the free motor () block from the Actuators palette and make sure 1 is selected. Duplicate it and choose 2 in the copy.
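PictoBlox builds these custom blocks visually, so this project has no text code. As a rough mental model only, the Python sketch below captures the idea behind the four blocks: each action is just a pair of motor directions. It is not PictoBlox's Python API; the names `Direction`, `ACTIONS`, and `motor_commands`, the `free` direction label, and the default speed of 100% are assumptions made for illustration.

```python
from enum import Enum
from typing import List, Tuple


class Direction(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    FREE = "free"  # motor released, like the free motor () block


# Hypothetical model of the four custom blocks:
# action name -> (motor 1 direction, motor 2 direction).
ACTIONS = {
    "go straight": (Direction.FORWARD, Direction.FORWARD),
    "turn left": (Direction.REVERSE, Direction.FORWARD),   # first motor block reversed
    "turn right": (Direction.FORWARD, Direction.REVERSE),  # second motor block reversed
    "brake": (Direction.FREE, Direction.FREE),
}


def motor_commands(action: str, speed: int = 100) -> List[Tuple[int, str, int]]:
    """Return (motor number, direction, speed %) for both motors of an action."""
    if not 0 <= speed <= 100:
        raise ValueError("speed is a percentage between 0 and 100")
    m1, m2 = ACTIONS[action]
    commands = []
    for motor, direction in ((1, m1), (2, m2)):
        # A freed motor is not driven, so report its speed as 0.
        pct = 0 if direction is Direction.FREE else speed
        commands.append((motor, direction.value, pct))
    return commands


print(motor_commands("turn left", 50))  # [(1, 'reverse', 50), (2, 'forward', 50)]
```

Keeping the actions in one table mirrors the custom blocks: changing how the robot turns means editing a single entry rather than the main loop.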

Completing the main script

Now, let’s move on to the main script.

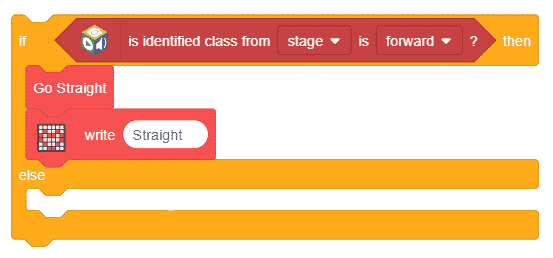

- Place an if-else block from the Control palette below the set cursor block.

- Drag and drop the is identified class from () is () block from the Machine Learning palette into the condition of the if-else block. Choose stage from the first drop-down and forward from the second. This block checks whether the trained model recognizes the forward class in the camera feed.

- Place the go straight block into the if arm.

- Write Straight on evive’s screen using the write () block from the Display palette.

- Duplicate the if-else block and place the copy into the else arm.

- In the copy, select left from the second drop-down, replace the go straight block with the turn left block, and write Left on evive’s display.

- Repeat the same process for the right and stop classes, using the turn right and brake blocks.
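The nested if-else chain is easiest to follow as a decision table. The Python sketch below mimics passes of the forever loop: it takes the class the model identified and picks the custom block to run and the text to write on evive’s screen. This is a hypothetical illustration, not PictoBlox code; the function names `choose_action` and `run`, the Right and Stop screen text, and doing nothing when no class matches are all assumptions.

```python
from typing import Iterable, List, Optional, Tuple


def choose_action(identified: str) -> Optional[Tuple[str, str]]:
    """Mirror the nested if-else: return (custom block, text for evive's screen)."""
    if identified == "forward":
        return ("go straight", "Straight")
    elif identified == "left":
        return ("turn left", "Left")
    elif identified == "right":
        return ("turn right", "Right")  # screen text assumed
    elif identified == "stop":
        return ("brake", "Stop")  # screen text assumed
    # No class matched: in this sketch, the innermost else arm does nothing.
    return None


def run(frames: Iterable[str]) -> List[str]:
    """Stand-in for the forever block, processing a finite list of predictions."""
    log = []
    for identified in frames:
        choice = choose_action(identified)
        if choice is None:
            continue
        block, text = choice
        # On the robot, set cursor at (10, 10) runs first, so each write starts in the same spot.
        log.append(f"{block} | screen: {text}")
    return log


print(run(["forward", "left", "stop"]))
```

Because the checks run in order, forward takes priority over the later classes if more than one condition were ever true in the same pass.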

The script is now complete. Click the green flag to run it.

Conclusion

With this, your AI gesture-controlled robot is set to rock ‘n’ roll. Enjoy!

![CODEAVOUR 2021 project demonstration video screenshot](https://ai.thestempedia.com/wp-content/uploads/elementor/thumbs/CODEAVOUR-2021-project-demonstration-video-4-12-screenshot-q7ynxvlv2t918md2w9xsnjiaauhor82z0jm2g1in34.png)