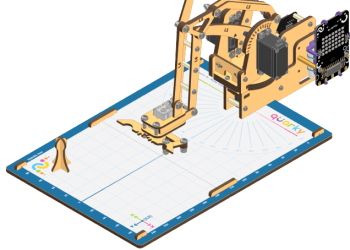

Learn how to assemble the Quarky Robotic Arm with this step-by-step guide. Follow the steps until your Quarky Robotic Arm matches the image shown in this guide, then use it to explore more advanced programs and activities.